Significant characteristics of distributed systems include independent failure of components and concurrency of components.
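Both characteristics can be seen even at small scale. The following is a minimal sketch, assuming a single Python process stands in for a distributed system. The hypothetical `component` function plays the role of an independent component, and component 2 is made to fail on purpose. The components run concurrently, and each outcome is recorded separately, so one failure does not stop the others from completing.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Dict, List, Tuple


def component(component_id: int) -> str:
    """Hypothetical component: component 2 fails, the others succeed."""
    if component_id == 2:
        raise RuntimeError(f"component {component_id} crashed")
    return f"component {component_id} ok"


def run_components(ids: List[int]) -> Tuple[Dict[int, str], Dict[int, str]]:
    """Run components concurrently and record each outcome separately."""
    results: Dict[int, str] = {}
    failures: Dict[int, str] = {}
    with ThreadPoolExecutor(max_workers=max(1, len(ids))) as pool:
        # Map each future back to the component that produced it.
        futures = {pool.submit(component, cid): cid for cid in ids}
        for future in as_completed(futures):
            cid = futures[future]
            try:
                results[cid] = future.result()
            except RuntimeError as exc:
                # This failure is isolated: the other components still finish.
                failures[cid] = str(exc)
    return results, failures


if __name__ == "__main__":
    ok, failed = run_components([1, 2, 3])
    print(sorted(ok))
    print(failed)
```

A real distributed system would also have to detect failures it cannot observe directly (for example, a node that stops responding), which a local exception does not capture.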

#Parallels definition full#

#Fundamentals of Parallel Computer Architecture#

Using the power of parallelism, a GPU can complete more work than a CPU in a given amount of time, provided the workload can be split into many independent operations. GPUs work together with CPUs to increase data throughput and the number of concurrent calculations within an application. The importance of parallel computing continues to grow with the increasing use of multicore processors and GPUs.

Raising the clock frequency increases the power a processor consumes, so beyond a certain point frequency scaling is no longer feasible. Programmers and manufacturers therefore began designing parallel system software and producing power-efficient processors with multiple cores to address power consumption and overheating in central processing units. The popularization and evolution of parallel computing in the 21st century came in response to processor frequency scaling hitting this power wall.

Mapping in parallel computing is used to solve embarrassingly parallel problems by applying a simple operation to every element of a sequence without requiring communication between the subtasks. Parallel applications are typically classified by how often their subtasks communicate: fine-grained parallelism, in which subtasks communicate many times per second; coarse-grained parallelism, in which subtasks do not communicate many times per second; and embarrassing parallelism, in which subtasks rarely or never communicate.
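The mapping idea can be sketched with Python's standard `concurrent.futures` module. This is an illustration under stated assumptions, not a reference implementation: `square` is a hypothetical per-element operation, and `parallel_map` is a hypothetical helper. Because each call to `square` reads only its own input, the subtasks never communicate, which is what makes the problem embarrassingly parallel.

```python
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor
from typing import Callable, Iterable, List, Type, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def square(x: int) -> int:
    # Per-element operation: depends only on its own input, so no
    # communication between subtasks is needed.
    return x * x


def parallel_map(func: Callable[[T], R], items: Iterable[T],
                 executor_cls: Type[Executor] = ProcessPoolExecutor,
                 workers: int = 4) -> List[R]:
    """Apply func to every element independently on a pool of workers.

    Executor.map returns results in the same order as the inputs.
    """
    with executor_cls(max_workers=workers) as pool:
        return list(pool.map(func, items))


if __name__ == "__main__":
    # Process pools need the __main__ guard on platforms that spawn workers.
    print(parallel_map(square, range(8)))  # [0, 1, 4, 9, 16, 25, 36, 49]
```

Processes are the default here because, in CPython, pure-Python CPU-bound work on threads is serialized by the global interpreter lock; passing `ThreadPoolExecutor` keeps the same structure for I/O-bound work. Functions sent to a process pool must be defined at module level so they can be pickled.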

#Parallels definition code#

There are generally four types of parallel computing, available from both proprietary and open-source parallel computing vendors: bit-level parallelism, instruction-level parallelism, task parallelism, and superword-level parallelism. Parallel computing infrastructure is typically housed within a single datacenter, where several processors are installed in a server rack; the application server distributes computation requests in small chunks, which are then executed simultaneously on each server.
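Of the four types, task parallelism is the easiest to show in application code, since bit-level and instruction-level parallelism happen inside the processor. A minimal sketch, assuming a hypothetical `summarize` helper: three different operations (`min`, `max`, and `statistics.mean`) run as separate tasks over the same data at the same time. Threads keep the example self-contained; in CPython, `ProcessPoolExecutor` is the usual swap when CPU-bound tasks need real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean
from typing import Callable, Dict, Sequence


def summarize(data: Sequence[float]) -> Dict[str, float]:
    """Run several *different* tasks over the same data concurrently."""
    # Each entry is a separate task: they share the input, not the work.
    tasks: Dict[str, Callable[[Sequence[float]], float]] = {
        "min": min,
        "max": max,
        "mean": mean,
    }
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        # Submit every task before waiting on any of them.
        futures = {name: pool.submit(fn, data) for name, fn in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(summarize([4, 8, 15, 16, 23, 42]))
```

The contrast with mapping is that here each worker runs a different function, whereas a map runs the same function on different data.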

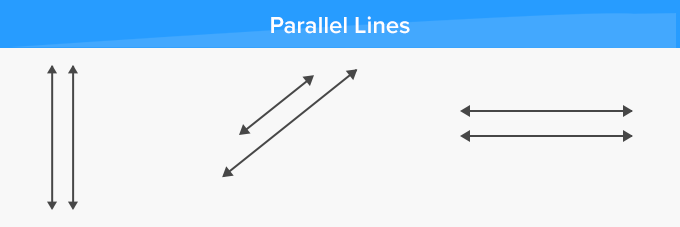

The primary goal of parallel computing is to increase the available computation power for faster application processing and problem solving. Parallel computing refers to the process of breaking a larger problem into smaller, independent, often similar parts that can be executed simultaneously by multiple processors communicating via shared memory; the results are combined upon completion as part of an overall algorithm.
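The break-down, execute, combine pattern described above can be sketched as follows. This is a minimal illustration with hypothetical names (`split`, `partial_sum_of_squares`, `parallel_sum_of_squares`): a sum of squares is divided into contiguous chunks, each chunk is computed by a separate worker without needing data from the others, and the partial results are added together at the end. Threads share one address space, which loosely mirrors the shared-memory setting, although in CPython the global interpreter lock keeps this pure-Python arithmetic from running truly in parallel; a process pool would be needed for real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split(data: Sequence[int], parts: int) -> List[Sequence[int]]:
    """Divide data into `parts` contiguous, non-overlapping slices of near-equal size."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    size, extra = divmod(len(data), parts)
    slices, start = [], 0
    for i in range(parts):
        # The first `extra` slices get one additional element each.
        end = start + size + (1 if i < extra else 0)
        slices.append(data[start:end])
        start = end
    return slices


def partial_sum_of_squares(chunk: Sequence[int]) -> int:
    """Independent subproblem: needs no data from any other chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: Sequence[int], workers: int = 4) -> int:
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    # Combine step: merge the partial results into the final answer.
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))  # 285
```

The combine step works here because addition is associative, so the partial sums can be added in any grouping; operations without that property need a more careful merge.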
